Tanzu Kubernetes Grid 1.5.2


Tanzu Kubernetes Grid 1.5.2 on vSphere

Caution: VMware recommends not installing or upgrading to TKG v1.5 until a future patch release, due to a bug in the versions of etcd in the versions of Kubernetes used by Tanzu Kubernetes Grid v1.5.0-v1.5.2. See Known Issues in the TKG v1.5 Release Notes.

Installation Guide

- Written for vSphere 7.0 and Ubuntu 20.04.

Download and Unpack the Tanzu CLI and kubectl

- Go to https://my.vmware.com and log in with your My VMware credentials.

- In the VMware Tanzu Kubernetes Grid row, click Go to Downloads.

- In the Select Version drop-down, select 1.5.2.

- Under Product Downloads, scroll to the section labeled VMware Tanzu CLI 1.5.2 CLI.

- For Linux, locate VMware Tanzu CLI for Linux and click Download Now.

- Navigate to the Kubectl 1.22.5 for VMware Tanzu Kubernetes Grid 1.5.2 section of the download page.

- For Linux, locate kubectl cluster cli v1.22.5 for Linux and click Download Now.

- (Optional) Verify that your downloaded files are unaltered from the original. VMware provides a SHA-1, a SHA-256, and an MD5 checksum for each download. To obtain these checksums, click Read More under the entry that you want to download. For more information, see Using Cryptographic Hashes.

- On your system, create a new directory named tanzu. If you previously unpacked artifacts for previous releases to this folder, delete the folder’s existing contents.

- In the tanzu folder, unpack the Tanzu CLI bundle file for your operating system. To unpack the bundle file, use the extraction tool of your choice. For example, the tar -xvf command.

- For Linux, unpack tanzu-cli-bundle-linux-amd64.tar.

- After you unpack the bundle file, in your tanzu folder, you will see a cli folder with multiple subfolders and files.

- Unpack the kubectl binary for your operating system:

- For Linux, unpack kubectl-linux-v1.22.5+vmware.1.gz.
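The optional checksum verification in the steps above can be scripted. The following is a minimal sketch, assuming GNU sha256sum is available on the jumpbox. The helper name verify_sha256 is hypothetical (it is not part of the Tanzu CLI), and the expected hash must be copied by hand from the Read More panel on the download page.

```shell
# verify_sha256 FILE EXPECTED_HEX
# Returns 0 when the SHA-256 digest of FILE equals EXPECTED_HEX,
# and a non-zero status otherwise.
# Hypothetical helper for illustration; not shipped with any VMware tool.
verify_sha256() {
  local file="$1" expected="$2" actual
  actual="$(sha256sum "$file" | awk '{print $1}')" || return 1
  [ "$actual" = "$expected" ]
}
```

A typical use would be to gate the unpack step on the check, for example running verify_sha256 on tanzu-cli-bundle-linux-amd64.tar with the published hash and calling tar -xvf only when it succeeds.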

Install the Tanzu and Other Tools

- Change to the tanzu/cli directory.

- Make the CLI available on the system.

- Install the binary to /usr/local/bin. For Tanzu Kubernetes Grid v1.5.2 the core binary is under core/v0.11.2.

$ sudo install core/v0.11.2/tanzu-core-linux_amd64 /usr/local/bin/tanzu

e.g.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ sudo install core/v0.11.2/tanzu-core-linux_amd64 /usr/local/bin/tanzu
[sudo] password for ubuntu:
[ubuntu@tkg-jumpbox /app/tkg/cli]$

- Initialize the Tanzu CLI.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ tanzu init
Checking for required plugins...
All required plugins are already installed and up-to-date
✔ successfully initialized CLI
[ubuntu@tkg-jumpbox /app/tkg/cli]$

- Tanzu Kubernetes Grid v1.5.2 uses Tanzu Framework v0.11.2, so tanzu version should report v0.11.2.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ tanzu version
version: v0.11.2
buildDate: 2022-03-17
sha: 9f16f375
[ubuntu@tkg-jumpbox /app/tkg/cli]$
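The version check above can be automated in a setup script. This is a small sketch, assuming the tanzu version output keeps the "version: vX.Y.Z" line format shown in the transcript. The function names tanzu_cli_version and expect_tanzu_version are hypothetical helpers introduced here for illustration.

```shell
# Print the value of the "version:" field from `tanzu version` output on stdin.
# Assumes the "version: vX.Y.Z" line format shown in the transcript above.
tanzu_cli_version() {
  awk '$1 == "version:" {print $2; exit}'
}

# Succeed only when the reported version equals the expected one.
# Intended use: tanzu version | expect_tanzu_version v0.11.2
expect_tanzu_version() {
  [ "$(tanzu_cli_version)" = "$1" ]
}
```

Piping tanzu version into expect_tanzu_version v0.11.2 then gives an exit status a script can branch on.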

Install the Tanzu CLI Plugin

Install the CLI plugins used to manage Tanzu Kubernetes clusters and their features.

- Remove any existing plugins.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ tanzu plugin clean
[ubuntu@tkg-jumpbox /app/tkg/cli]$

- Change to the cli directory.

- From the tanzu directory, run the following command to install all plugins.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ tanzu plugin sync
Checking for required plugins...
Installing plugin 'login:v0.11.2'
Installing plugin 'management-cluster:v0.11.2'
Installing plugin 'package:v0.11.2'
Installing plugin 'pinniped-auth:v0.11.2'
Installing plugin 'secret:v0.11.2'
Successfully installed all required plugins
✔ Done
[ubuntu@tkg-jumpbox /app/tkg/cli]$

- Verify the installed plugins.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ tanzu plugin list
NAME                DESCRIPTION                                                        SCOPE       DISCOVERY  VERSION  STATUS
login               Login to the platform                                              Standalone  default    v0.11.2  installed
management-cluster  Kubernetes management-cluster operations                           Standalone  default    v0.11.2  installed
package             Tanzu package management                                           Standalone  default    v0.11.2  installed
pinniped-auth       Pinniped authentication operations (usually not directly invoked)  Standalone  default    v0.11.2  installed
secret              Tanzu secret management                                            Standalone  default    v0.11.2  installed
[ubuntu@tkg-jumpbox /app/tkg/cli]$
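Checking the plugin table by eye is easy to get wrong, so the following sketch parses it instead. It assumes the column layout shown above, where the plugin name is the first column and the status is the last. missing_plugins is a hypothetical helper name, and the handling of a "not installed" status is an assumption about how the CLI words that state.

```shell
# missing_plugins NAME...
# Reads `tanzu plugin list` output on stdin and prints every NAME that is
# not listed with a final STATUS column of exactly "installed".
# Hypothetical helper; assumes the table layout shown in the transcript.
missing_plugins() {
  local listing name
  listing="$(cat)"
  for name in "$@"; do
    printf '%s\n' "$listing" |
      awk -v n="$name" '
        # Guard against a two-word status such as "not installed".
        $1 == n && $NF == "installed" && $(NF-1) != "not" { found = 1 }
        END { exit !found }
      ' || printf '%s\n' "$name"
  done
}
```

For the five plugins in this guide, the call would be tanzu plugin list piped into missing_plugins login management-cluster package pinniped-auth secret. Empty output means nothing is missing.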

Install kubectl

- Change to the directory where you unpacked kubectl-linux-v1.22.5+vmware.1.gz above.

- Install the binary to /usr/local/bin.

[ubuntu@tkg-jumpbox /app/tkg]$
$ chmod ugo+x kubectl-linux-v1.22.5+vmware.1
[ubuntu@tkg-jumpbox /app/tkg]$
$ sudo install kubectl-linux-v1.22.5+vmware.1 /usr/local/bin/kubectl
[ubuntu@tkg-jumpbox /app/tkg]$
$ kubectl version
Client Version: version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.5+vmware.1", GitCommit:"8ef4fc5015d7c770c6ac367fcb1d13ed4e99d974", GitTreeState:"clean", BuildDate:"2021-12-17T23:12:22Z", GoVersion:"go1.16.12", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.5+vmware.1", GitCommit:"f4553304874c3b89584280a5ac1b005d57c725a8", GitTreeState:"clean", BuildDate:"2021-03-22T16:56:50Z", GoVersion:"go1.15.8", Compiler:"gc", Platform:"linux/amd64"}
WARNING: version difference between client (1.22) and server (1.20) exceeds the supported minor version skew of +/-1
[ubuntu@tkg-jumpbox /app/tkg]$
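The warning at the end of the transcript comes from the client and server differing by two minor versions (1.22 versus 1.20). As an illustration of the +/-1 rule quoted in that warning, here is a small sketch that compares two GitVersion strings. The function names minor_of and skew_supported are hypothetical, and the parsing assumes versions of the form vMAJOR.MINOR.PATCH with an optional suffix.

```shell
# Extract the minor number from a version such as "v1.22.5+vmware.1".
minor_of() {
  printf '%s\n' "$1" | sed -E 's/^v?[0-9]+\.([0-9]+).*/\1/'
}

# skew_supported CLIENT_VERSION SERVER_VERSION
# Succeeds when the minor versions differ by at most one,
# which is the limit stated in the kubectl warning above.
skew_supported() {
  local c s diff
  c="$(minor_of "$1")"
  s="$(minor_of "$2")"
  diff=$(( c - s ))
  [ "$diff" -ge -1 ] && [ "$diff" -le 1 ]
}
```

With the two versions from the transcript, skew_supported v1.22.5+vmware.1 v1.20.5+vmware.1 returns a non-zero status, matching the warning.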

Install the Carvel Tools

Carvel provides a set of reliable, single-purpose, composable tools that help you build, configure, and deploy applications to Kubernetes.

Tanzu Kubernetes Grid uses the following tools from the Carvel open-source project.

- ytt - a command-line tool for templating and patching YAML files. You can also use ytt to collect fragments and piles of YAML into modular chunks for easy re-use.

- kapp - the applications deployment CLI for Kubernetes. It allows you to install, upgrade, and delete multiple Kubernetes resources as one application.

- kbld - an image-building and resolution tool.

- imgpkg - a tool that enables Kubernetes to store configurations and the associated container images as OCI images, and to transfer these images.

Install ytt

- Decompress the binary and move it to /usr/local/bin.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ gunzip ytt-linux-amd64-v0.35.1+vmware.1.gz
$ chmod ugo+x ytt-linux-amd64-v0.35.1+vmware.1
$ sudo mv ./ytt-linux-amd64-v0.35.1+vmware.1 /usr/local/bin/ytt
[ubuntu@tkg-jumpbox /app/tkg/cli]$

Install kapp

- Decompress the binary and move it to /usr/local/bin.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ gunzip kapp-linux-amd64-v0.42.0+vmware.1.gz
$ chmod ugo+x kapp-linux-amd64-v0.42.0+vmware.1
$ sudo mv ./kapp-linux-amd64-v0.42.0+vmware.1 /usr/local/bin/kapp
[ubuntu@tkg-jumpbox /app/tkg/cli]$

Install kbld

- Decompress the binary and move it to /usr/local/bin.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ gunzip kbld-linux-amd64-v0.31.0+vmware.1.gz
$ chmod ugo+x kbld-linux-amd64-v0.31.0+vmware.1
$ sudo mv ./kbld-linux-amd64-v0.31.0+vmware.1 /usr/local/bin/kbld
[ubuntu@tkg-jumpbox /app/tkg/cli]$

Install imgpkg

- Decompress the binary and move it to /usr/local/bin.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ gunzip imgpkg-linux-amd64-v0.18.0+vmware.1.gz
$ chmod ugo+x imgpkg-linux-amd64-v0.18.0+vmware.1
$ sudo mv ./imgpkg-linux-amd64-v0.18.0+vmware.1 /usr/local/bin/imgpkg
[ubuntu@tkg-jumpbox /app/tkg/cli]$
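The four Carvel installs above repeat the same three commands (gunzip, chmod, mv). As a sketch, they can be folded into one function. install_gz_binary is a hypothetical helper name, and unlike the transcripts it does not call sudo itself, so the caller needs write permission on the target directory.

```shell
# install_gz_binary ARCHIVE.gz TARGET_PATH
# Decompresses ARCHIVE.gz in place, marks the result executable,
# and moves it to TARGET_PATH, mirroring the manual steps above.
# Hypothetical helper; run it with sufficient privileges when TARGET_PATH
# is under /usr/local/bin.
install_gz_binary() {
  local archive="$1" target="$2"
  local unpacked="${archive%.gz}"
  gunzip "$archive" || return 1
  chmod ugo+x "$unpacked" || return 1
  mv "$unpacked" "$target"
}
```

For example, install_gz_binary ytt-linux-amd64-v0.35.1+vmware.1.gz /usr/local/bin/ytt covers the ytt step, and the same call shape applies to kapp, kbld, and imgpkg with their respective file names.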

Prepare to Deploy Management Clusters to vSphere

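- A jumpbox with the Tanzu CLI, Docker, and kubectl installed.

- Network

  - Every cluster that you deploy to vSphere needs a static IP address that Kube-Vip uses for the API server endpoint.

  - You specify this static IP address when you deploy the management cluster.

  - The VIP must be outside the DHCP range but in the same subnet as the DHCP range.

- Image template

  - Before deploying a cluster to vSphere, you must import into vSphere a base image template that contains the OS and Kubernetes versions that the cluster nodes run on.

  - After importing the OVA into vSphere, convert the resulting VM into a VM template.

  - Management cluster: the OVA must have Kubernetes v1.22.5, the default version for Tanzu Kubernetes Grid v1.5.

    - Ubuntu v20.04 Kubernetes v1.22.5 OVA

    - Photon v3 Kubernetes v1.22.5 OVA

  - Workload cluster: the OVA can have any supported combination of OS and Kubernetes version, as packaged in a Tanzu Kubernetes release. This lets you deploy Tanzu Kubernetes clusters with different Kubernetes versions.

  - This guide skips the detailed steps for adding the image template.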

- Create an SSH key pair

  - Use the ssh-keygen command on the bootstrap machine.

$ ssh-keygen -t rsa -b 4096 -C "jongha0212@gmail.com"

  - Add the private key to the SSH agent.

$ ssh-add ~/.ssh/id_rsa

  - If you see the error "Could not open a connection to your authentication agent.", start the agent with eval $(ssh-agent) and run ssh-add again.

root@tkg-jumpbox:~/.ssh# ssh-add ~/.ssh/id_rsa
Could not open a connection to your authentication agent.
root@tkg-jumpbox:~/.ssh# eval $(ssh-agent)
Agent pid 180371
root@tkg-jumpbox:~/.ssh# ssh-add ~/.ssh/id_rsa
Identity added: /root/.ssh/id_rsa (jongha0212@gmail.com)
root@tkg-jumpbox:~/.ssh#

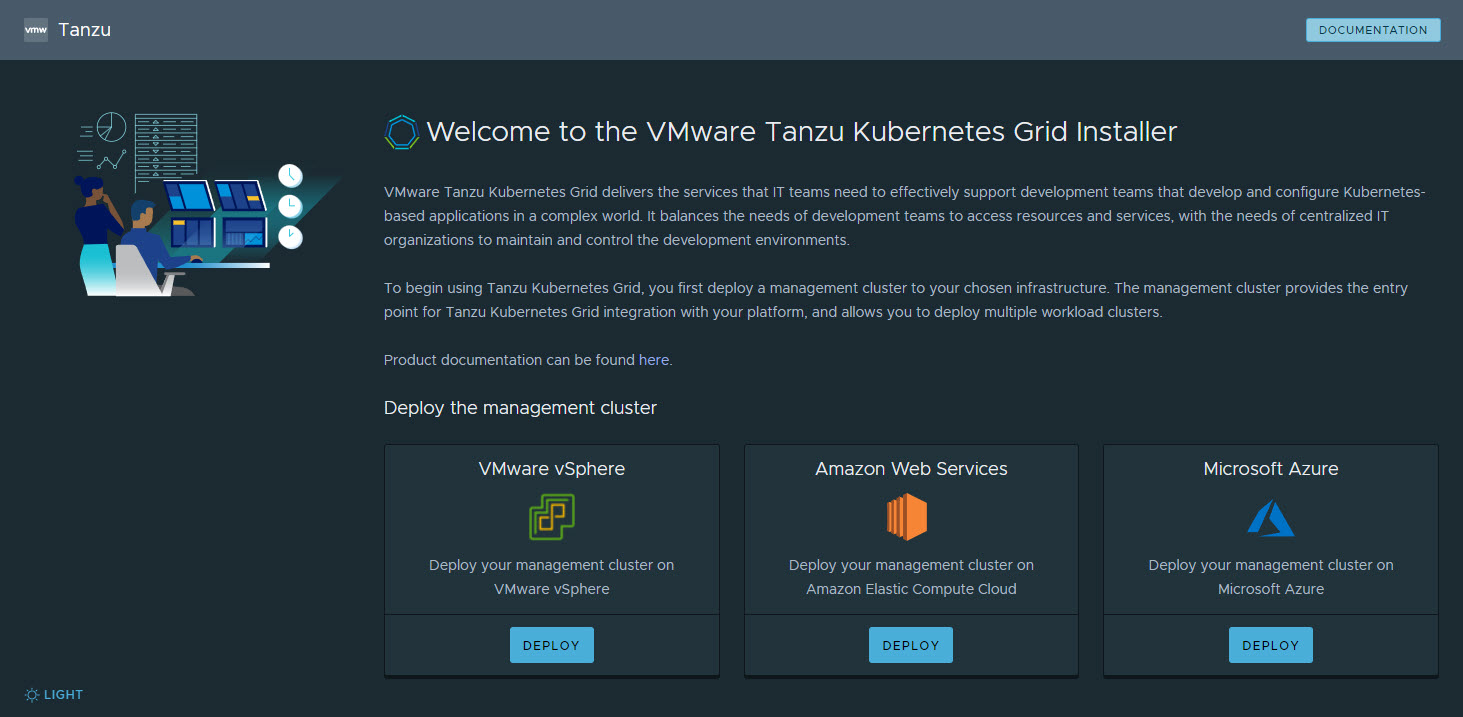
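The network prerequisite above (the VIP must sit in the DHCP subnet but outside the DHCP range) is easy to check before deployment. The sketch below does the comparison with integer arithmetic. The function names are hypothetical, and the subnet prefix and DHCP range used in the usage note are assumed example values, not values taken from this environment.

```shell
# Convert a dotted IPv4 address to a single integer.
ip_to_int() {
  local ip="$1" old_ifs="$IFS"
  IFS=.
  set -- $ip
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# vip_is_valid VIP NETWORK PREFIX_LEN DHCP_START DHCP_END
# Succeeds when VIP is inside NETWORK/PREFIX_LEN and outside
# the inclusive range DHCP_START..DHCP_END.
vip_is_valid() {
  local vip net mask start end
  vip="$(ip_to_int "$1")"
  net="$(ip_to_int "$2")"
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  start="$(ip_to_int "$4")"
  end="$(ip_to_int "$5")"
  [ $(( vip & mask )) -eq $(( net & mask )) ] || return 1
  [ "$vip" -lt "$start" ] || [ "$vip" -gt "$end" ]
}
```

Assuming, purely for illustration, a /24 network 192.168.230.0 with a DHCP range of 192.168.230.100 to 192.168.230.200, the call vip_is_valid 192.168.230.12 192.168.230.0 24 192.168.230.100 192.168.230.200 succeeds, while a VIP of 192.168.230.150 is rejected.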

Deploy Management Clusters

- Run the tanzu mc create command to start the installer UI.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ tanzu mc create --ui --bind 192.168.230.25:8080 --browser none

Validating the pre-requisites...
Serving kickstart UI at http://192.168.230.25:8080

- Select the infrastructure provider on which to deploy the management cluster.

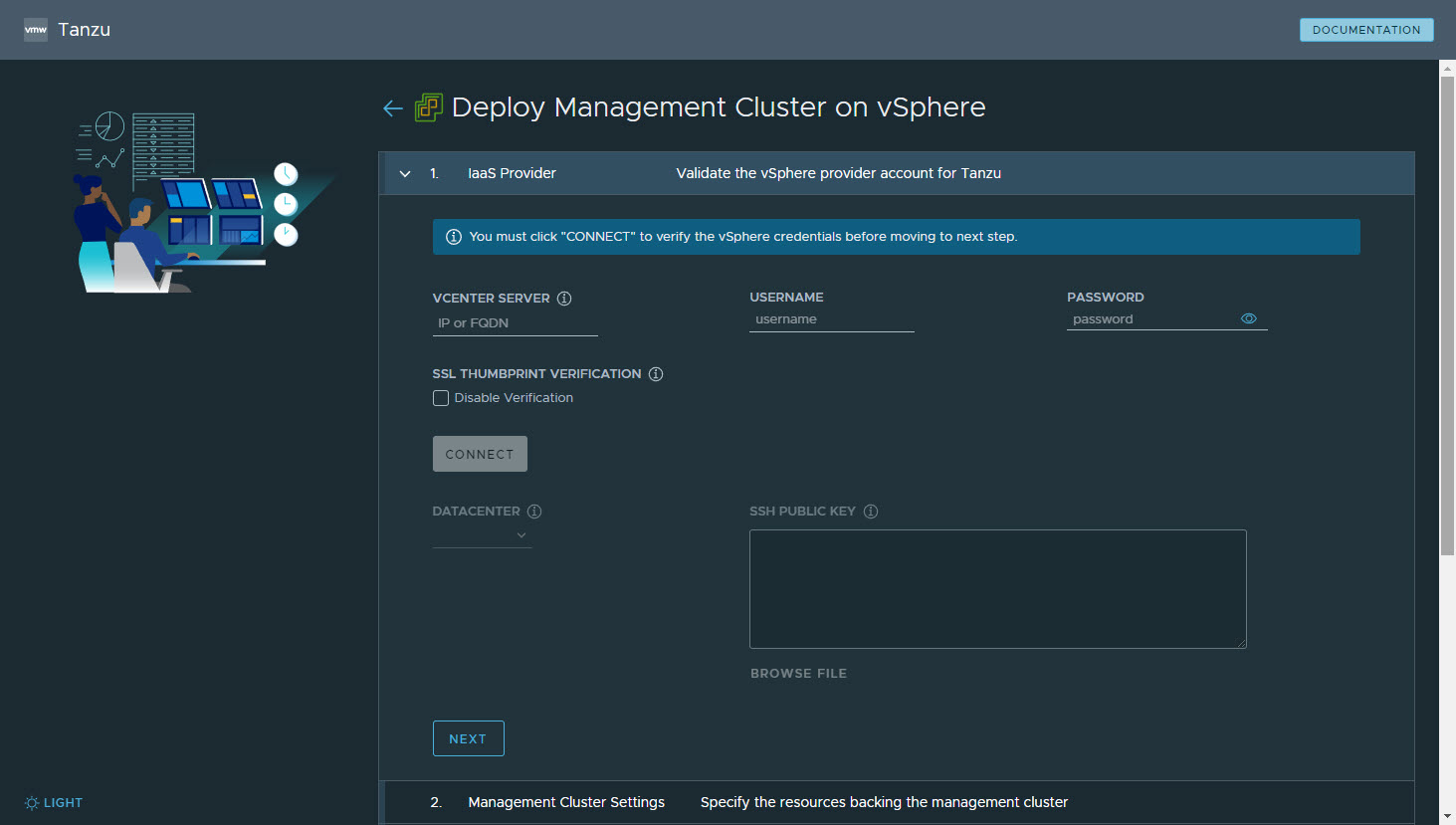

Infrastructure Provider Configuration

- VCENTER SERVER: the vCenter IP address or FQDN

- USERNAME: an account with privileges to create and configure VMs

- PASSWORD: the password for that account

- SSH PUBLIC KEY: the SSH public key of the jumpbox

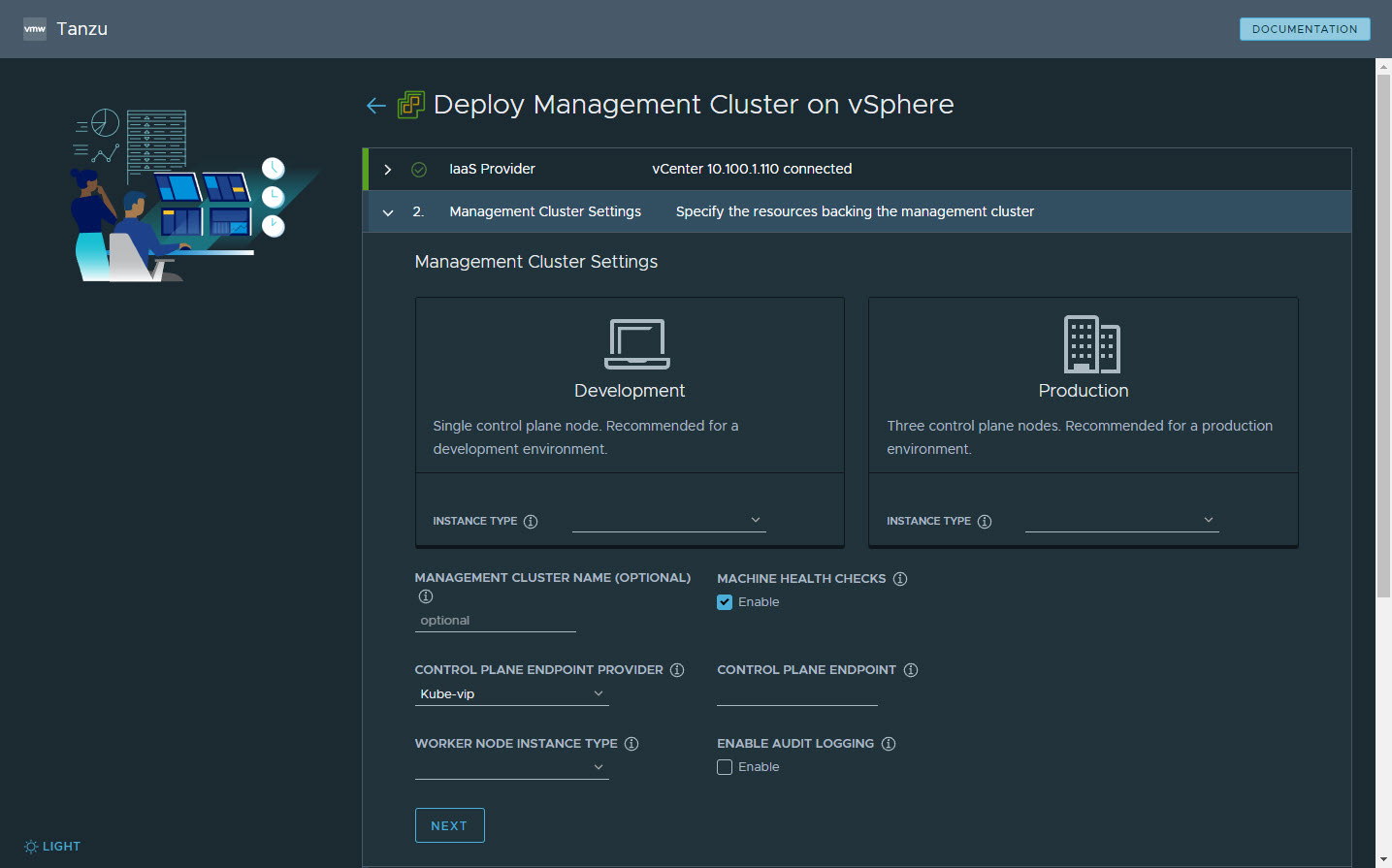

Management Cluster Settings

- Development or Production: a single control plane node versus three control plane nodes

- INSTANCE TYPE: choose a suitable size for the control plane nodes from small, medium, large, and extra-large

- MANAGEMENT CLUSTER NAME: the name of the management cluster

- MACHINE HEALTH CHECK: monitors machine health and automatically repairs unhealthy machines

- CONTROL PLANE ENDPOINT PROVIDER: if you do not have an NSX load balancer, select the default, ‘kube-vip’

- CONTROL PLANE ENDPOINT: the kube-vip endpoint setting (the static IP address described in the prerequisites)

- WORKER NODE INSTANCE TYPE: choose a suitable size from small, medium, large, and extra-large

- ENABLE AUDIT LOGGING: enables Kubernetes audit logs, which can be integrated with fluent-bit and elasticsearch

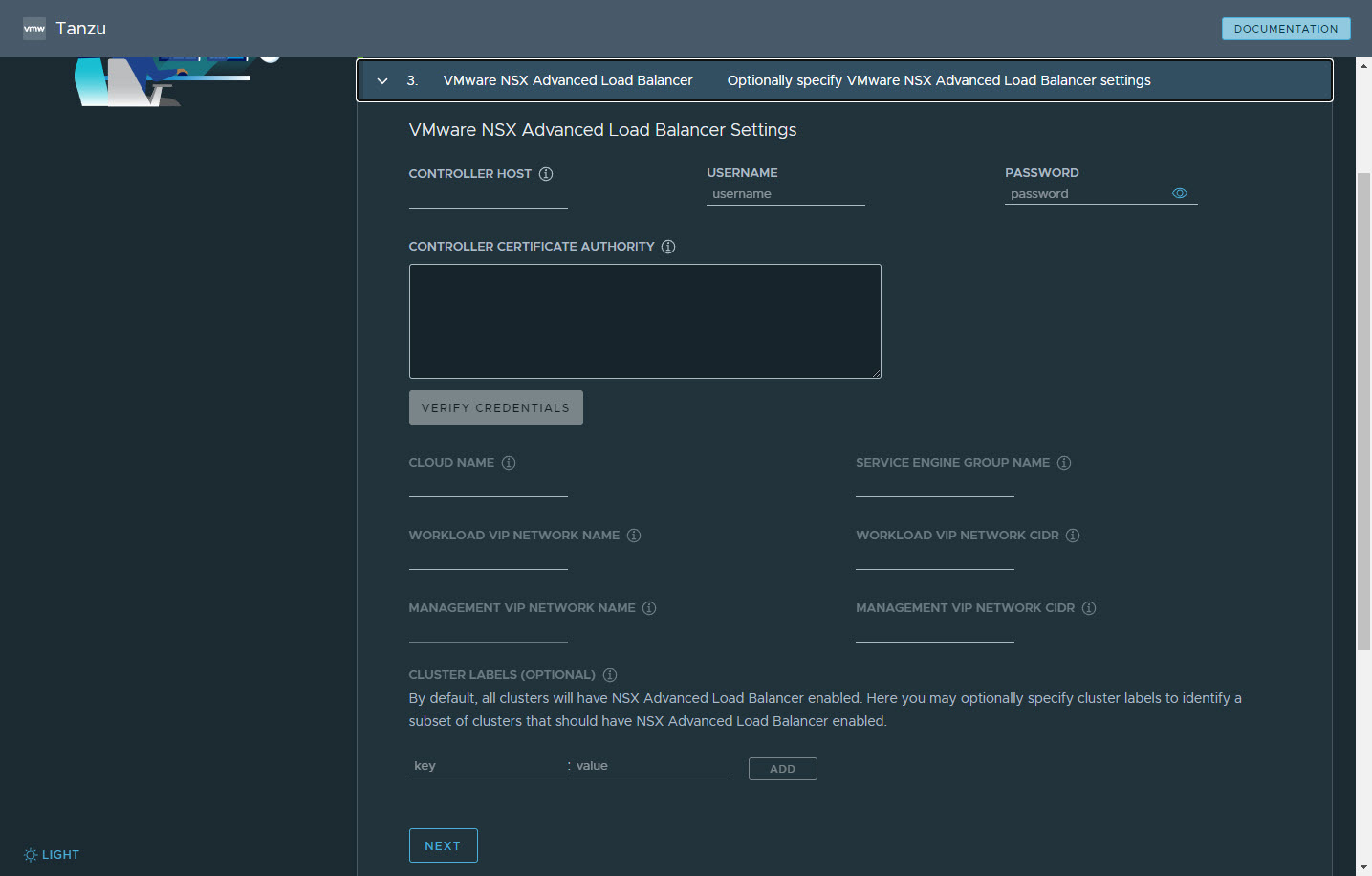

VMware NSX Advanced Load Balancer

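- VMware NSX ALB (Advanced Load Balancer) provides an L4+L7 load balancing solution for vSphere.

- NSX ALB includes a Kubernetes operator that integrates with the Kubernetes API to manage the lifecycle of load balancing and ingress resources for workloads.

- To use NSX ALB, you must first deploy it in your vSphere environment.

- The test environment has no NSX, so this step is skipped.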

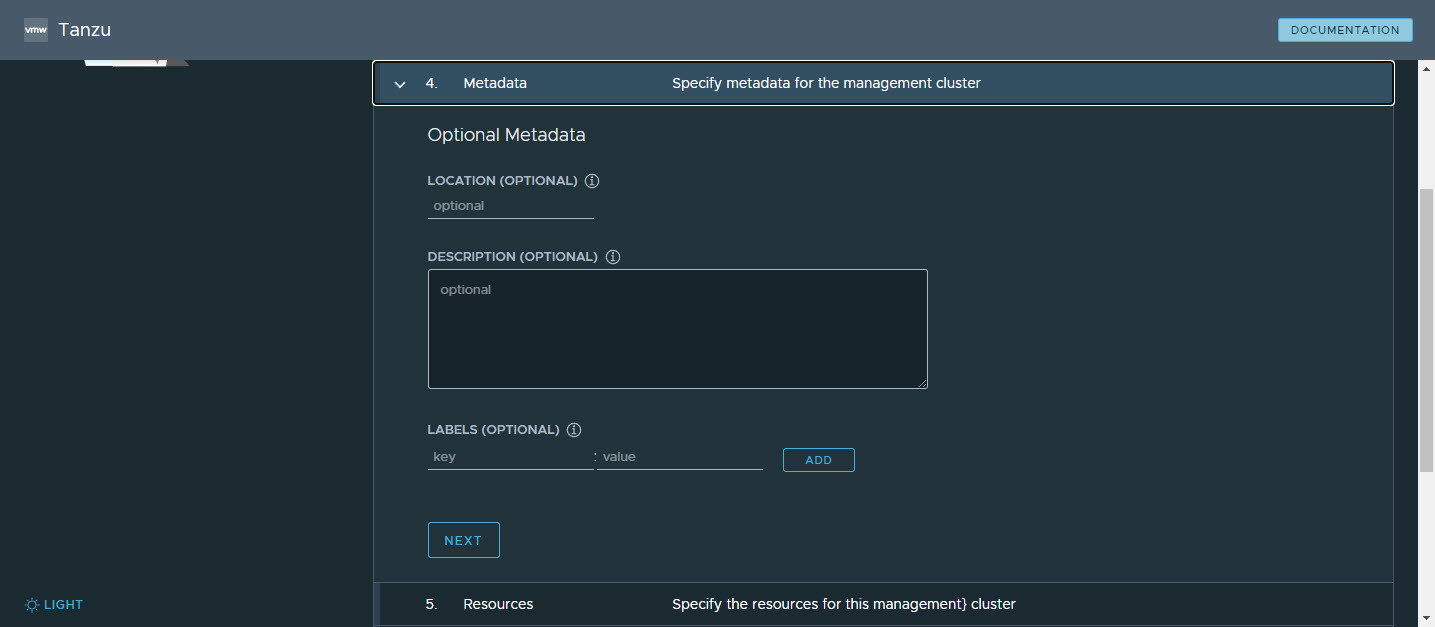

Metadata

- LOCATION: the location where the cluster runs.

- DESCRIPTION: a description of the management cluster.

- LABELS: key/value pairs that help users identify the cluster.

- These fields are optional and can be left blank.

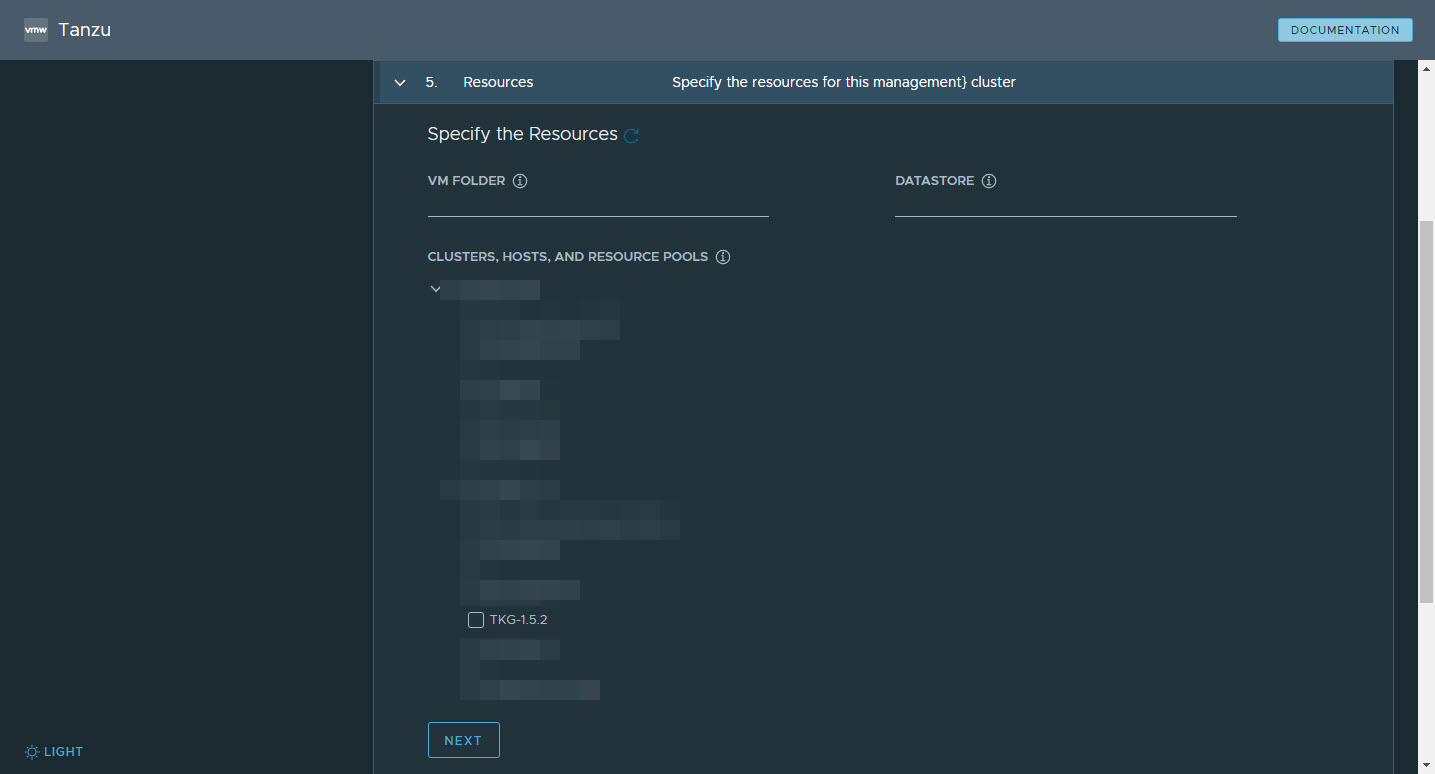

Resources

- VM FOLDER: select the VM folder for the management cluster

- DATASTORE: select the vSphere datastore

- CLUSTERS, HOSTS, AND RESOURCE POOLS: select the resource pool

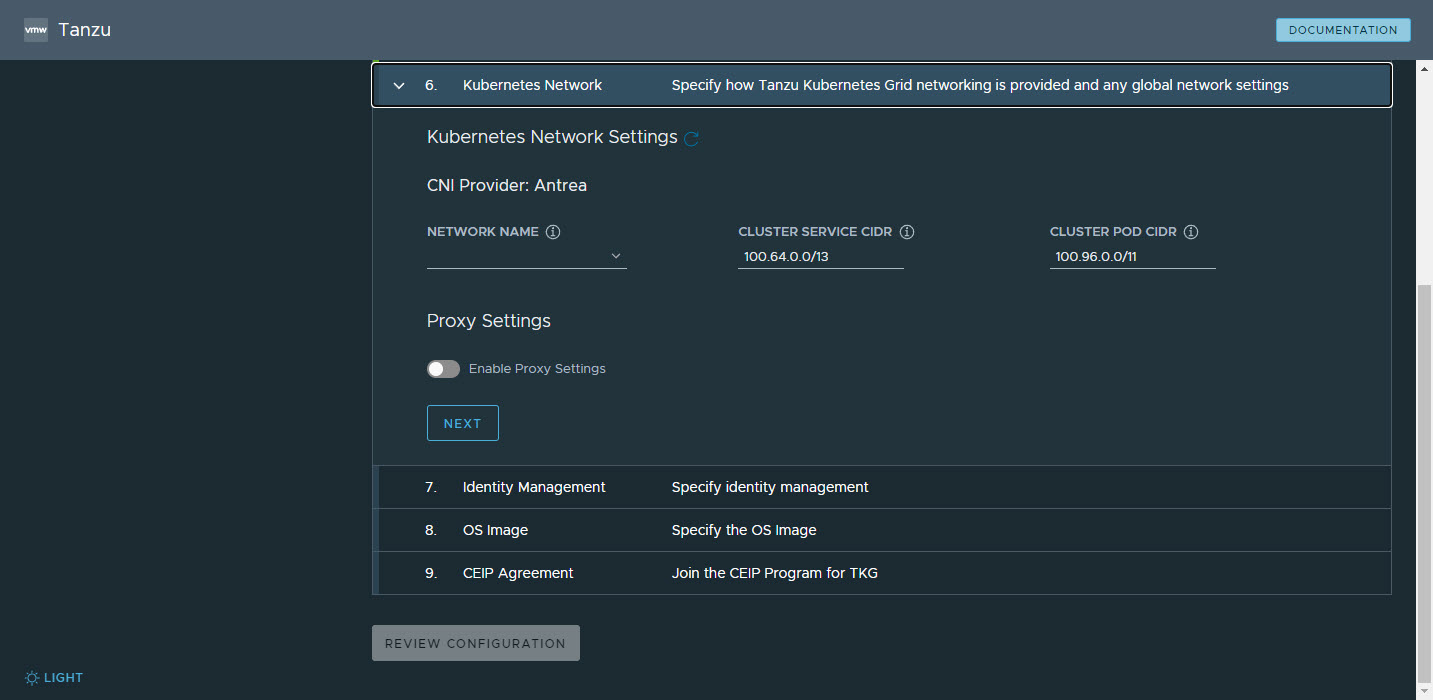

Kubernetes Network

- Configure the network for Kubernetes services.

- CNI Provider: Antrea (the TKG default)

- NETWORK NAME: select the vSphere network to use as the Kubernetes service network

- CLUSTER SERVICE CIDR & CLUSTER POD CIDR: using the default CIDR ranges is recommended

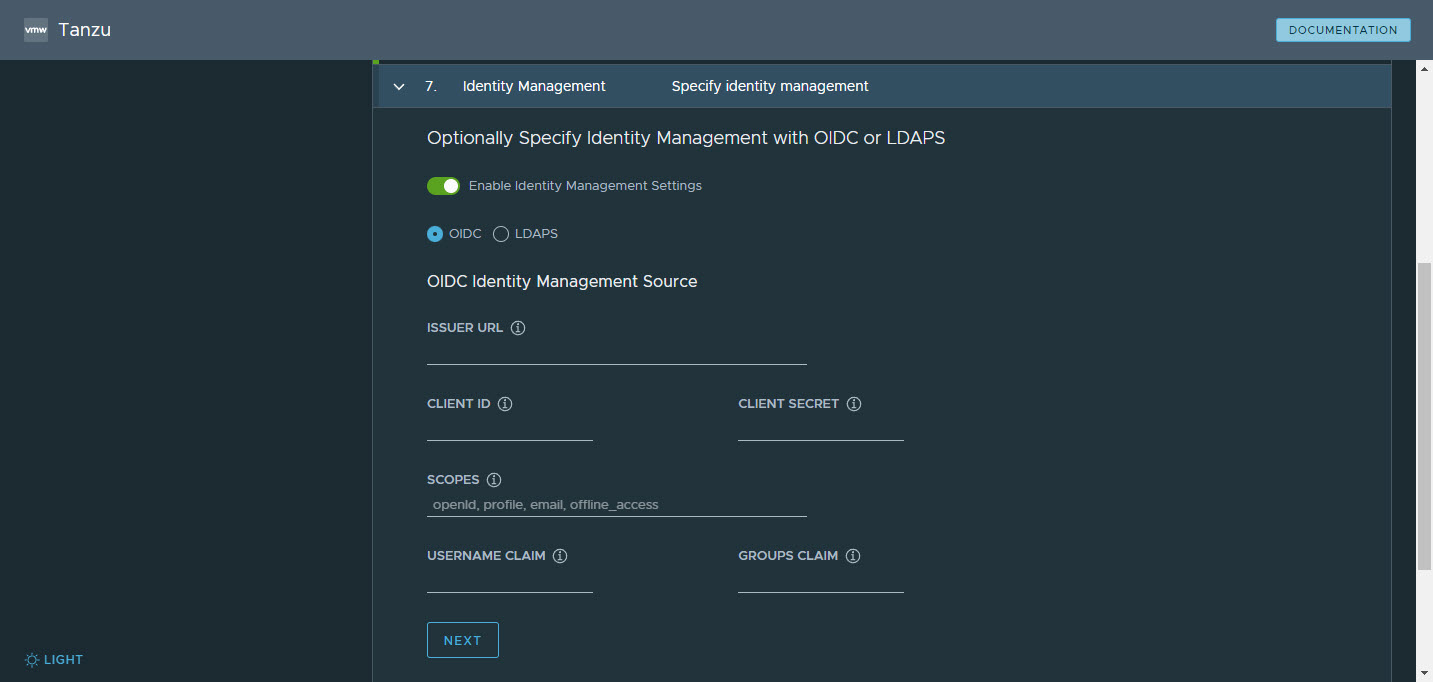

Identity Management

- This step configures how Tanzu Kubernetes Grid implements identity management.

- Two methods are available, OIDC and LDAP. The test environment implements neither, so this setting is skipped.

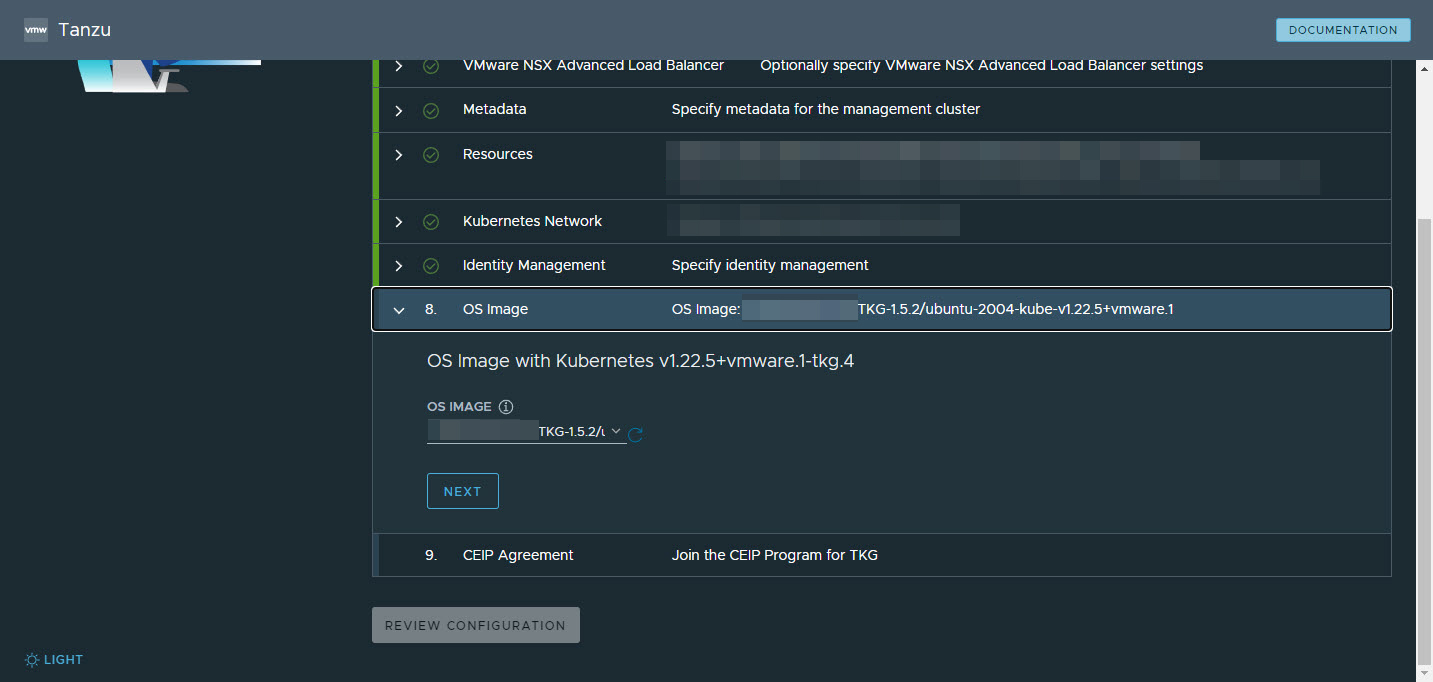

OS Image

- Select the OS and Kubernetes version image template to use for deploying the Tanzu Kubernetes Grid VMs.

- Use ‘ubuntu-2004-kube-v1.22.5+vmware.1’, which was created as a template earlier.

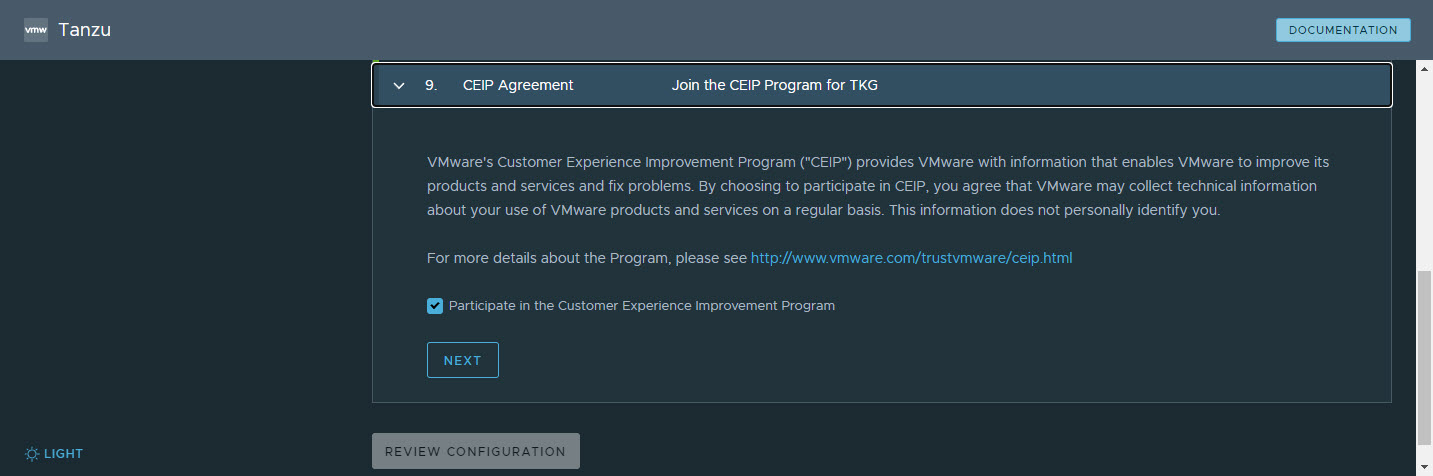

CEIP Agreement

- Enable this option to participate in the VMware Customer Experience Improvement Program.

REVIEW CONFIGURATION

- Review the settings configured so far. Deploying from this page creates the management cluster.

- To change a setting, click ‘EDIT CONFIGURATION’.

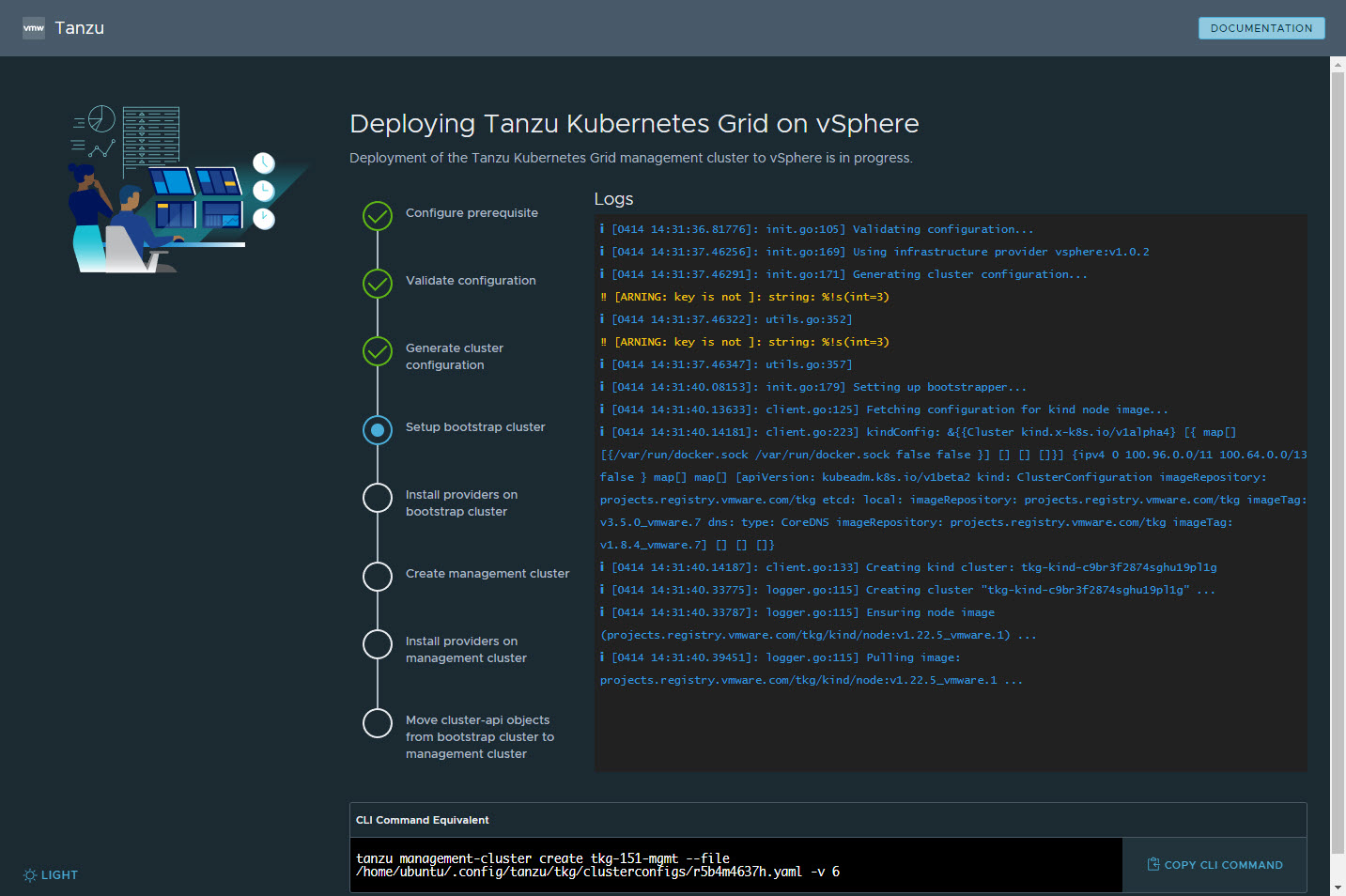

DEPLOY MANAGEMENT CLUSTER

- You can deploy by clicking ‘DEPLOY MANAGEMENT CLUSTER’ in the UI, or

- you can deploy by running the equivalent CLI command.
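For the CLI route, the deployment is driven by a cluster configuration file. The sketch below picks the most recently modified YAML file from a configuration directory. latest_cluster_config is a hypothetical helper, and the default directory is the clusterconfigs path this guide uses for management cluster configuration files. Whether the installer UI saved its file there in your environment is an assumption you should verify.

```shell
# latest_cluster_config [DIR]
# Prints the most recently modified *.yaml file in DIR.
# DIR defaults to the clusterconfigs directory used in this guide.
# Hypothetical helper; it parses `ls -t`, so it assumes file names
# without newlines.
latest_cluster_config() {
  local dir="${1:-$HOME/.config/tanzu/tkg/clusterconfigs}"
  ls -t "$dir"/*.yaml 2>/dev/null | head -n 1
}
```

A deployment from the CLI could then look like tanzu management-cluster create --file "$(latest_cluster_config)" -v 6, where the verbosity level is only an example.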

Management cluster installation result

- When the installation completes, use the kubeconfig to check the management cluster.

$ tanzu mc create --ui --bind 192.168.230.25:8080 --browser none

Validating the pre-requisites...
Serving kickstart UI at http://192.168.230.25:8080
Identity Provider not configured. Some authentication features won't work.
WARNING: key is not a string: %!s(int=3)
WARNING: key is not a string: %!s(int=3)
Validating configuration...
web socket connection established
sending pending 2 logs to UI
Using infrastructure provider vsphere:v1.0.2
Generating cluster configuration...
WARNING: key is not a string: %!s(int=3)
WARNING: key is not a string: %!s(int=3)
Setting up bootstrapper...
Bootstrapper created. Kubeconfig: /home/ubuntu/.kube-tkg/tmp/config_DrgIc5JN
Installing providers on bootstrapper...
Fetching providers
Installing cert-manager Version="v1.5.3"
Waiting for cert-manager to be available...
Installing Provider="cluster-api" Version="v1.0.1" TargetNamespace="capi-system"
Installing Provider="bootstrap-kubeadm" Version="v1.0.1" TargetNamespace="capi-kubeadm-bootstrap-system"
Installing Provider="control-plane-kubeadm" Version="v1.0.1" TargetNamespace="capi-kubeadm-control-plane-system"
Installing Provider="infrastructure-vsphere" Version="v1.0.2" TargetNamespace="capv-system"
Start creating management cluster...
[cluster control plane is still being initialized: WaitingForControlPlane, cluster infrastructure is still being provisioned: WaitingForControlPlane]
cluster control plane is still being initialized: ScalingUp
Saving management cluster kubeconfig into /home/ubuntu/.kube/config
Installing providers on management cluster...
Fetching providers
Installing cert-manager Version="v1.5.3"
I0414 14:56:13.132510 52084 request.go:665] Waited for 1.011137579s due to client-side throttling, not priority and fairness, request: GET:https://192.168.230.12:6443/apis/clusterctl.cluster.x-k8s.io/v1alpha3?timeout=30s
Waiting for cert-manager to be available...
Installing Provider="cluster-api" Version="v1.0.1" TargetNamespace="capi-system"
I0414 14:56:38.824003 52084 request.go:665] Waited for 1.011406255s due to client-side throttling, not priority and fairness, request: GET:https://192.168.230.12:6443/apis/networking.k8s.io/v1?timeout=30s
Installing Provider="bootstrap-kubeadm" Version="v1.0.1" TargetNamespace="capi-kubeadm-bootstrap-system"
Installing Provider="control-plane-kubeadm" Version="v1.0.1" TargetNamespace="capi-kubeadm-control-plane-system"
Installing Provider="infrastructure-vsphere" Version="v1.0.2" TargetNamespace="capv-system"
Waiting for the management cluster to get ready for move...
Waiting for addons installation...
Moving all Cluster API objects from bootstrap cluster to management cluster...
Performing move...
Discovering Cluster API objects
Moving Cluster API objects Clusters=1
Creating objects in the target cluster
Deleting objects from the source cluster
Waiting for additional components to be up and running...
Waiting for packages to be up and running...
You can now access the management cluster tkg-151-mgmt by running 'kubectl config use-context tkg-151-mgmt-admin@tkg-151-mgmt'

Management cluster created!

You can now create your first workload cluster by running the following:

tanzu cluster create [name] -f [file]

[ubuntu@tkg-jumpbox ~]$

- Check the management cluster with the kubectl CLI (k is used here as an alias for kubectl).

$ k config get-contexts
CURRENT   NAME                              CLUSTER        AUTHINFO             NAMESPACE
*         tkg-151-mgmt-admin@tkg-151-mgmt   tkg-151-mgmt   tkg-151-mgmt-admin
$ k get node
NAME                                 STATUS   ROLES                  AGE   VERSION
tkg-151-mgmt-control-plane-8zc5k     Ready    control-plane,master   33m   v1.22.5+vmware.1
tkg-151-mgmt-control-plane-fn8l4     Ready    control-plane,master   37m   v1.22.5+vmware.1
tkg-151-mgmt-control-plane-nkvg4     Ready    control-plane,master   16m   v1.22.5+vmware.1
tkg-151-mgmt-md-0-548ccb665c-x97sz   Ready    <none>                 36m   v1.22.5+vmware.1
tkg-151-mgmt-md-1-64c8f9688f-jphht   Ready    <none>                 36m   v1.22.5+vmware.1
tkg-151-mgmt-md-2-bd8bffd75-825b9    Ready    <none>                 36m   v1.22.5+vmware.1
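To confirm node health without reading the table by hand, the node listing can be filtered. This is a minimal sketch that assumes the column layout shown above, with the node name first and STATUS second. not_ready_nodes is a hypothetical helper name.

```shell
# Reads `kubectl get node --no-headers` output on stdin and prints the
# name of every node whose STATUS column is not exactly "Ready".
# Note: a cordoned node ("Ready,SchedulingDisabled") is also reported.
not_ready_nodes() {
  awk 'NF >= 2 && $2 != "Ready" {print $1}'
}
```

Running kubectl get node --no-headers piped into not_ready_nodes prints nothing when every node is Ready, as in the output above.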


Deploy Tanzu Kubernetes Clusters

Deploy a Workload Cluster: Basic Process

When you deploy a workload cluster, most of its configuration is the same as the management cluster configuration.

The easiest way to create the YAML file for a workload cluster is to copy the management cluster's YAML file and edit it.

- Management cluster YAML file

  - If you installed the management cluster with the default settings, you can find its YAML file in the following path.

  - $HOME/.config/tanzu/tkg/clusterconfigs

  - Copy the YAML file that was used to install the management cluster so that it can be used to deploy the workload cluster.

- Edit the workload cluster YAML

  - CLUSTER_NAME: change to the workload cluster name

  - VSPHERE_CONTROL_PLANE_ENDPOINT: change to the kube-vip address of the workload cluster

  - CONTROL_PLANE_MACHINE_COUNT: 3

  - WORKER_MACHINE_COUNT: 3

  - Adding only these settings is enough to deploy and create the cluster.
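The copy-and-edit steps above can be sketched as a single function. It assumes the configuration file uses top-level KEY: value lines, as the four keys listed above suggest. make_workload_config is a hypothetical helper, and the file names and the workload VIP in the usage note are placeholders.

```shell
# make_workload_config SRC_YAML DST_YAML CLUSTER_NAME VIP
# Copies the management cluster config to DST_YAML while:
#   - replacing CLUSTER_NAME and VSPHERE_CONTROL_PLANE_ENDPOINT
#   - dropping any existing machine-count lines and appending counts of 3
# Hypothetical helper; assumes SRC_YAML ends with a newline.
make_workload_config() {
  local src="$1" dst="$2" name="$3" vip="$4"
  {
    sed -e "s|^CLUSTER_NAME:.*|CLUSTER_NAME: ${name}|" \
        -e "s|^VSPHERE_CONTROL_PLANE_ENDPOINT:.*|VSPHERE_CONTROL_PLANE_ENDPOINT: ${vip}|" \
        -e '/^CONTROL_PLANE_MACHINE_COUNT:/d' \
        -e '/^WORKER_MACHINE_COUNT:/d' \
        "$src"
    printf 'CONTROL_PLANE_MACHINE_COUNT: 3\n'
    printf 'WORKER_MACHINE_COUNT: 3\n'
  } > "$dst"
}
```

For example, make_workload_config mgmt.yaml dev-cluster.yaml tkg-151-prod 192.168.230.13 would produce a workload configuration, where mgmt.yaml and the 192.168.230.13 address are assumed values for illustration. Review the result before deploying, since the other settings are carried over unchanged.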

Deploy Workload Cluster

- Log in to the management cluster with tanzu login.

[ubuntu@tkg-jumpbox /app/tkg/cli]$
$ tanzu login
? Select a server tkg-151-mgmt ()
✔ successfully logged in to management cluster using the kubeconfig tkg-151-mgmt
Checking for required plugins...
Installing plugin 'cluster:v0.11.2'
Installing plugin 'kubernetes-release:v0.11.2'
Successfully installed all required plugins
[ubuntu@tkg-jumpbox /app/tkg/cli]$

- Run tanzu cluster create.

$ tanzu cluster create -f /home/ubuntu/.config/tanzu/tkg/clusterconfigs/dev-cluster.yaml -v 9

- Check the creation log.

[ubuntu@tkg-jumpbox ~/.config/tanzu/tkg/clusterconfigs]$
$ tanzu cluster create -f /home/ubuntu/.config/tanzu/tkg/clusterconfigs/dev-cluster.yaml -v 9
compatibility file (/home/ubuntu/.config/tanzu/tkg/compatibility/tkg-compatibility.yaml) already exists, skipping download
BOM files inside /home/ubuntu/.config/tanzu/tkg/bom already exists, skipping download
Using namespace from config:
Validating configuration...
Waiting for resource pinniped-info of type *v1.ConfigMap to be up and running
configmaps "pinniped-info" not found, retrying
configmaps "pinniped-info" not found, retrying
configmaps "pinniped-info" not found, retrying
configmaps "pinniped-info" not found, retrying
configmaps "pinniped-info" not found, retrying
configmaps "pinniped-info" not found, retrying
configmaps "pinniped-info" not found, retrying
Warning: Pinniped configuration not found. Skipping pinniped configuration in workload cluster. Please refer to the documentation to check if you can configure pinniped on workload cluster manually
Creating workload cluster 'tkg-151-prod'...
patch cluster object with operation status:
{
  "metadata": {
    "annotations": {
      "TKGOperationInfo" : "{\"Operation\":\"Create\",\"OperationStartTimestamp\":\"2022-04-14 08:21:29.999298272 +0000 UTC\",\"OperationTimeout\":1800}",
      "TKGOperationLastObservedTimestamp" : "2022-04-14 08:21:29.999298272 +0000 UTC"
    }
  }
}
Waiting for cluster to be initialized...
cluster state is unchanged 1
cluster control plane is still being initialized: WaitingForControlPlane
cluster control plane is still being initialized: WaitingForControlPlane, retrying
cluster control plane is still being initialized: ScalingUp
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 1
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 2
cluster control plane is still being initialized: ScalingUp, retrying
cluster control plane is still being initialized: ScalingUp, retrying
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 1
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 2
cluster control plane is still being initialized: ScalingUp, retrying
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 1
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 2
cluster control plane is still being initialized: ScalingUp, retrying
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 1
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 2
cluster control plane is still being initialized: ScalingUp, retrying
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 1
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 2
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 3
cluster control plane is still being initialized: ScalingUp, retrying
cluster state is unchanged 4
cluster control plane is still being initialized: ScalingUp, retrying
Getting secret for cluster
Waiting for resource tkg-151-prod-kubeconfig of type *v1.Secret to be up and running
Waiting for cluster nodes to be available...
Waiting for resource tkg-151-prod of type *v1beta1.Cluster to be up and running
Waiting for resources type *v1beta1.KubeadmControlPlaneList to be up and running
control-plane is still creating replicas, DesiredReplicas=3 Replicas=3 ReadyReplicas=2 UpdatedReplicas=3, retrying
Waiting for resources type *v1beta1.MachineDeploymentList to be up and running
Waiting for resources type *v1beta1.MachineList to be up and running
Waiting for addons installation...
Waiting for resources type *v1beta1.ClusterResourceSetList to be up and running
Waiting for resource antrea-controller of type *v1.Deployment to be up and running
Waiting for packages to be up and running...
Waiting for package: antrea
Waiting for package: metrics-server
Waiting for package: secretgen-controller
Waiting for package: vsphere-cpi
Waiting for package: vsphere-csi
Waiting for resource vsphere-csi of type *v1alpha1.PackageInstall to be up and running
Waiting for resource secretgen-controller of type *v1alpha1.PackageInstall to be up and running
Waiting for resource metrics-server of type *v1alpha1.PackageInstall to be up and running
Waiting for resource antrea of type *v1alpha1.PackageInstall to be up and running
Waiting for resource vsphere-cpi of type *v1alpha1.PackageInstall to be up and running
waiting for 'antrea' Package to be installed, retrying
Successfully reconciled package: vsphere-cpi
Successfully reconciled package: metrics-server
Successfully reconciled package: secretgen-controller
waiting for 'vsphere-csi' Package to be installed, retrying
Successfully reconciled package: antrea
package reconciliation failed:
I0414 08:25:41.994635 588 request.go:665] Waited for 1.03525011s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/api/v1?timeout=32s
I0414 08:25:51.996727 588 request.go:665] Waited for 1.398634752s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/apis/security.antrea.tanzu.vmware.com/v1alpha1/tiers?labelSelector=kapp.k14s.io%2Fapp%3D1649924654718161801
I0414 08:26:02.043411 588 request.go:665] Waited for 4.94531621s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/apis/metrics.k8s.io/v1beta1?timeout=32s
I0414 08:26:12.092838 588 request.go:665] Waited for 1.345963645s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/apis/discovery.k8s.io/v1?timeout=32s
I0414 08:26:22.791707 588 request.go:665] Waited for 1.047724876s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/apis/apiextensions.k8s.io/v1?timeout=32s
kapp: Error: Timed out waiting after 30s, retrying
package reconciliation failed:
I0414 08:25:41.994635 588 request.go:665] Waited for 1.03525011s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/api/v1?timeout=32s
I0414 08:25:51.996727 588 request.go:665] Waited for 1.398634752s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/apis/security.antrea.tanzu.vmware.com/v1alpha1/tiers?labelSelector=kapp.k14s.io%2Fapp%3D1649924654718161801
I0414 08:26:02.043411 588 request.go:665] Waited for 4.94531621s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/apis/metrics.k8s.io/v1beta1?timeout=32s
I0414 08:26:12.092838 588 request.go:665] Waited for 1.345963645s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/apis/discovery.k8s.io/v1?timeout=32s
I0414 08:26:22.791707 588 request.go:665] Waited for 1.047724876s due to client-side throttling, not priority and fairness, request: GET:https://100.64.0.1:443/apis/apiextensions.k8s.io/v1?timeout=32s
kapp: Error: Timed out waiting after 30s, retrying
waiting for 'vsphere-csi' Package to be installed, retrying
Successfully reconciled package: vsphere-csi

Workload cluster 'tkg-151-prod' created

[ubuntu@tkg-jumpbox ~/.config/tanzu/tkg/clusterconfigs]$

- Get the workload cluster kubeconfig.

$ tanzu cluster kubeconfig get tkg-151-prod --admin
Credentials of cluster 'tkg-151-prod' have been saved
You can now access the cluster by running 'kubectl config use-context tkg-151-prod-admin@tkg-151-prod'
$ k config get-contexts
CURRENT   NAME                              CLUSTER        AUTHINFO             NAMESPACE
*         tkg-151-mgmt-admin@tkg-151-mgmt   tkg-151-mgmt   tkg-151-mgmt-admin
          tkg-151-prod-admin@tkg-151-prod   tkg-151-prod   tkg-151-prod-admin
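Both clusters in this guide have admin contexts named in the form NAME-admin@NAME. Assuming that pattern holds, which is an observation from the output above rather than a documented guarantee, a tiny helper can build the context name for scripts. admin_context_for is a hypothetical name.

```shell
# admin_context_for CLUSTER_NAME
# Prints the admin kubeconfig context name in the NAME-admin@NAME form
# seen in the `config get-contexts` output above.
admin_context_for() {
  printf '%s-admin@%s\n' "$1" "$1"
}
```

Switching to the workload cluster would then be kubectl config use-context "$(admin_context_for tkg-151-prod)", which matches the command suggested in the tanzu output.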
